Constants

In our research, we have approximated the number of steps that an algorithm needs to perform by looking at its asymptotic complexity, which drops any leading constants or smaller-order terms.For any reasonable problem sizes, simplifying to the highest order term is likely to be a good approximation. But dropping the leading constant may be worrisome if complexity class improvements come with inflation in the size of the leading constant. One particularly important example of this is the 1990 Coppersmith-Winograd algorithm and its successors, which to our knowledge have no actual implementations because “the huge constants involved in the complexity of fast matrix multiplication usually make these algorithms impractical”21. If inflation of leading constants is typical, it would mean that our results overestimate the scale of algorithm improvement. On the other hand, if leading constants neither increase or decrease, on average, then it is safe to analyze algorithms without them since they will, on average, cancel out when ratios of algorithms are taken.

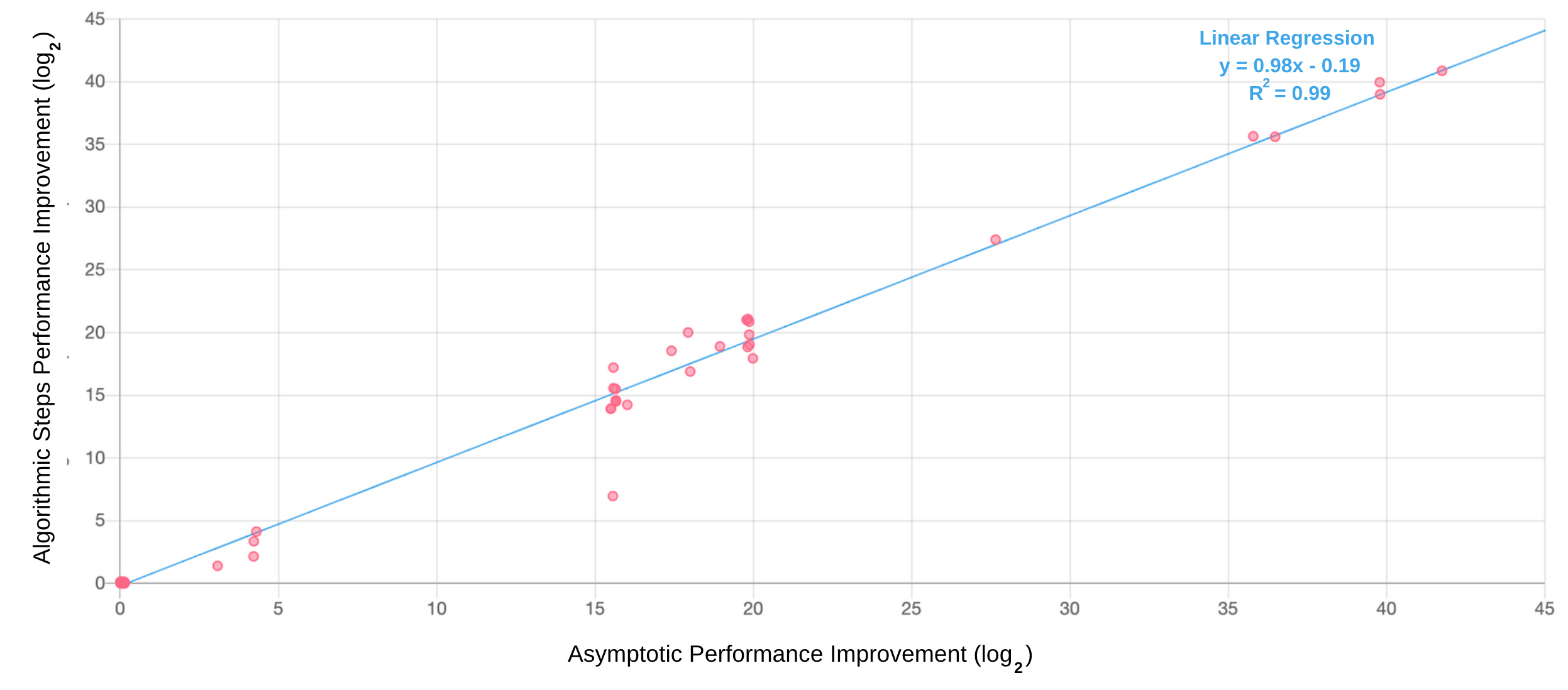

The figure shows that, for the cases where the data is available, the size of improvements to the number of algorithmic steps and asymptotic performance are nearly identical. Cases, where algorithms transitioned from exponential to polynomial time, cannot be shown on this graph because the change is too large. However, an analysis of the change in their leading constants shows that, on average, there is 28% leading constant . That said, this effect is so small compared to the asymptotic gains that it has no effect on our other estimates}. Thus, for the majority of algorithms, there is virtually no systematic inflation of leading constants. We cannot assume that this necessarily extrapolates to unmeasured algorithms, since higher complexity may lead to both higher leading constants and a lower likelihood of quantifying them (e.g. Matrix Multiplication). But this analysis reveals that these are the exception, rather than the rule. Thus, asymptotic complexity is an excellent approximation for understanding how most algorithms improve.